What is Service-Oriented Architecture (SOA)? Explain data center and its features along with Open-Source Software in data centers.

Service-Oriented Architecture (SOA)

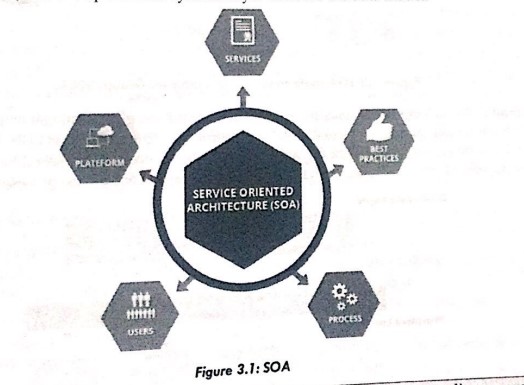

Service-Oriented Architecture (SOA) is an architectural approach that lets diverse applications communicate regardless of platform, implementation language, or location by exposing generic, dependable services that can be used as application building blocks. SOA encompasses the approaches and strategies used to develop complex applications and information systems, and it differs from traditional architectures in having its own set of architectural characteristics and rules. An SOA combines loosely coupled, black-box components to deliver a well-defined level of service.
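The idea of loosely coupled, black-box services can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `InventoryService`, `InMemoryInventory`, and `can_fulfil` are hypothetical names invented for the example and do not come from any SOA product or standard. The point it tries to show is that the consumer depends only on the service contract, so the provider behind it could be swapped without touching the consumer.

```python
from typing import Protocol


class InventoryService(Protocol):
    """Service contract the consumer depends on (hypothetical, for illustration)."""

    def stock_level(self, sku: str) -> int:
        ...


class InMemoryInventory:
    """One possible provider; a remote or database-backed one could replace it."""

    def __init__(self, stock: dict[str, int]) -> None:
        self._stock = dict(stock)

    def stock_level(self, sku: str) -> int:
        # Unknown items are reported as out of stock.
        return self._stock.get(sku, 0)


def can_fulfil(order: dict[str, int], inventory: InventoryService) -> bool:
    """Consumer logic that sees only the contract, never the provider's internals."""
    return all(inventory.stock_level(sku) >= qty for sku, qty in order.items())


inventory = InMemoryInventory({"cable": 10, "switch": 2})
print(can_fulfil({"cable": 4, "switch": 1}, inventory))
print(can_fulfil({"switch": 3}, inventory))
```

In a real SOA the contract would usually be expressed in a platform-neutral form (for example a web service description) rather than a Python type, but the dependency direction is the same.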

DATA CENTER

- A data center is a facility that houses computing equipment such as servers, routers, switches, and firewalls, as well as supporting components such as backup equipment, fire suppression systems, and air conditioning. A data center can be complex (a dedicated building) or simple (an area or room that houses only a few servers). A data center can also be private or shared.

- Data center components are frequently at the heart of an organization's information system (IS). As a result, these critical facilities often require a substantial investment in supporting technologies such as air conditioning and climate control systems, fire suppression and smoke detection, secure entry and identification, and raised floors for easier cabling and water damage avoidance.

- When a data center is shared, granting multiple organizations and individuals virtual access often makes more sense than granting them full physical access. Shared data centers are usually owned and managed by a single organization that leases center partitions (virtual or physical) to client organizations. These clients are frequently small businesses that lack the financial and technical capabilities needed to maintain a dedicated data center. The leasing option lets smaller enterprises benefit from professional data center capabilities without incurring large capital expenditures.

- A data center is where an organization's IT operations and equipment are centralized and where its data is stored, managed, and disseminated. Data centers house a network's most critical systems and are vital to the day-to-day functioning of the network. As a result, organizations prioritize the security and reliability of data centers and the information they hold.

- Although each data center is unique, most fall into one of two types: internet-facing data centers and enterprise (or "internal") data centers. Internet-facing data centers typically support a small number of applications, are browser-based, and serve a large number of unknown users. Enterprise data centers, on the other hand, serve fewer users but host more applications, ranging from off-the-shelf to bespoke.

- Data center designs and requirements can vary greatly. A data center built for a cloud service such as Amazon EC2 has facility, infrastructure, and security requirements that differ dramatically from those of a wholly private data center, such as one designed for the Pentagon to secure sensitive data.

Features of data center

- Infrastructure and location: Once you have decided how much data storage you would like to outsource to a data center, you can use it as a measure to determine what type of infrastructure you will require. The type of servers utilized, the location of the center, and the configuration of the servers and networks are all factors that might influence your choices.

- Efficiency and Reliability: Power backup is critical, and it helps to understand how that backup is provisioned for your servers. For your organization to run efficiently, the data center's uptime should be at least 99.995%, backed by easy access to your data for all processes.

- Data redundancy for unexpected situations: The data center should guarantee redundancy of your stored data in the event of an emergency or as part of a disaster recovery plan. Talk to the data center staff to confirm that all failure scenarios, such as those outlined in this article, were considered when the data center was designed.

- Data security in all aspects: The data center must ensure both the physical and the virtual security of your data. Visit the facility in person to evaluate both before making your decision.

- Access and connectivity for smooth functioning: The data housed in a data center is rarely a static block; it must remain accessible for your company's day-to-day purposes, so ease of access must also be considered. The center's network connectivity is a significant factor here.

- Scalability to support growth: As your company expands, so will its data storage requirements. The methods and speed of obtaining this data would also need to evolve in tandem with this expansion. Check to see whether the data center has plans for future development and can give you these additional resources on short notice.

- Manageability: A data center should allow simple and seamless management of all of its components. This can be accomplished through automation and by reducing human intervention in routine tasks.

- Availability: A data center should guarantee that information is available whenever it is needed. In other words, there should be no downtime. Unavailability of information can cost a business a great deal of money for every hour of outage.

- Security: All policies, procedures, and core element integration work together to prevent unauthorized access to information.

- Scalability: Business expansion nearly always requires deploying more servers, new applications, databases, and so on, so the infrastructure needs to be scalable.

- Performance: The goal of performance management is to ensure that all elements of the data center perform optimally and meet the required service levels.

- Data integrity: Ensure that data is stored and retrieved exactly as it was received.

- Monitoring: A continual process of gathering information about the various components and services running in the data center. The purpose is self-evident: to anticipate the unexpected.

- Reporting: Performance, capacity, and utilization data gathered together at a point in time.
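To make the 99.995% figure mentioned above concrete, an availability percentage can be converted into a downtime budget. The following is a minimal sketch; the function name `allowed_downtime_minutes` and the choice of a 365-day year are assumptions made for this example, not part of any standard. Under those assumptions, 99.995% works out to roughly 26 minutes of downtime per year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year (assumption)


def allowed_downtime_minutes(availability_percent: float,
                             period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the downtime budget implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_minutes * (1 - availability_percent / 100)


# Compare a few common availability targets, including the 99.995% figure.
for target in (99.9, 99.99, 99.995):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% availability allows about {minutes:.1f} minutes of downtime per year")
```

Passing a different `period_minutes` (for example the number of minutes in a month) gives the budget for a shorter reporting window.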

OPEN-SOURCE SOFTWARE IN DATA CENTERS

- The Open Source Initiative uses the Open Source Definition to determine whether a software license is genuinely open source. The phrase "open source" refers to a form of software licensing that makes source code available to the public without imposing significant copyright restrictions. The definition's criteria make no mention of trademark or patent usage, and they require no cooperation to ensure that any shared audit or release regime applies to derivative works. It is regarded as an explicit feature of open source that it places no limits on use or distribution by any organization or person. In principle, it forbids such limits in order to guarantee continued access to derivative works, even for the major original contributors. For well over a decade, there has been steady demand for software that is free, dependable, and adaptable to individual needs.

- Open-source distributions such as Red Hat and openSUSE, together with open-source software such as Apache, MySQL, and dozens of others, have long been used to power databases, web, email, and other servers. However, because the programs used in a data center have such a large impact, many implementers were hesitant to deploy open-source software there, until now. Recently, more than a few users have become vocal proponents of the view that open source can and does work in the data center environment.

- An Enterprise Service Bus (ESB) is a software architecture that is typically implemented using middleware infrastructure technologies. ESBs are usually built on well-known standards and provide core services to complex systems via an event-driven, standards-based messaging engine (called the bus because it transforms and transports messages across the architecture).

- Apache Synapse, a lightweight and easy-to-use open-source ESB, provides a wide range of administration, routing, and transformation features. It is considered quite versatile and can be used in many environments because it supports HTTP, SOAP, SMTP, FTP, and file system transports. It also supports Web Services Addressing, Web Services Security, Web Services Reliable Messaging, efficient binary attachments, and important transformation standards including XSLT, XPath, and XQuery. Synapse offers many useful functions out of the box, and it can also be extended with common programming languages such as Java, JavaScript, and Ruby.

- Another example is the Open ESB project, which offers an enterprise service bus runtime along with sample service engines and binding components. With Open ESB, corporate applications and web services can be easily integrated as loosely coupled composite applications. This enables a company to compose and recompose composite applications in real time, reaping the benefits of a genuine service-oriented architecture. Today, most open-source users feel that these products have attained a degree of maturity comparable to, and in some cases superior to, their commercial equivalents. Open-source products have pushed commercial suppliers to compete on pricing and service quality. Because open-source code is accessible and visible, developers can troubleshoot problems and learn how other developers have dealt with similar challenges. Users can deploy these solutions throughout their businesses and around the globe without having to track client licenses.
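The two jobs of the bus described above, transforming messages and transporting them to interested services, can be illustrated with a toy in-process sketch. This is not the API of Apache Synapse or Open ESB: `MiniBus`, its methods, and the `order.created` topic are hypothetical names invented for the example, and a real ESB would add network transports, reliability, and security on top.

```python
from typing import Callable

Message = dict[str, str]
Handler = Callable[[Message], None]
Transform = Callable[[Message], Message]


class MiniBus:
    """Toy in-process bus: routes by topic and transforms before delivery."""

    def __init__(self) -> None:
        self._routes: dict[str, list[tuple[Transform, Handler]]] = {}

    def subscribe(self, topic: str, handler: Handler,
                  transform: Transform = lambda m: m) -> None:
        """Register a handler for a topic, with an optional per-subscriber transform."""
        self._routes.setdefault(topic, []).append((transform, handler))

    def publish(self, topic: str, message: Message) -> int:
        """Deliver a transformed copy to each subscriber; return the delivery count."""
        deliveries = self._routes.get(topic, [])
        for transform, handler in deliveries:
            handler(transform(dict(message)))
        return len(deliveries)


billing_inbox: list[Message] = []
audit_inbox: list[Message] = []

bus = MiniBus()
# Billing expects an upper-case currency code, so the bus adapts the message.
bus.subscribe("order.created", billing_inbox.append,
              transform=lambda m: {**m, "currency": m["currency"].upper()})
# Audit receives the message unchanged.
bus.subscribe("order.created", audit_inbox.append)

delivered = bus.publish("order.created", {"id": "42", "currency": "eur"})
print(delivered, billing_inbox, audit_inbox)
```

The publisher never references the billing or audit services directly, which is the loose coupling an ESB is meant to provide.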

Examples of open-source software in data centers, by category:

- Web: Apache, Jetty, Laminas Project (formerly Zend Framework), etc.

- Database: MySQL, PostgreSQL, MariaDB, MongoDB, SQLite, CouchDB, etc.

- Application: Zope, Plone, Apache Struts, etc.

- Systems and Network Management: openQRM, Zenoss, Linux Virtual Server Load Balancer, DNS-based role management clusters, Dispatcher-Based Load Balancing Clusters, etc.

- Virtualization: XenServer, VirtualBox, Proxmox, Linux KVM, oVirt, Client Hyper-V, etc.
