What is GFS? Explain the features of GFS.

Google File System (GFS)

- The Google File System is a scalable distributed file system designed for large, data-intensive distributed applications. It offers fault tolerance while running on low-cost commodity hardware and delivers high aggregate performance to a large number of clients. While GFS shares many of the aims of earlier distributed file systems, its design has been influenced by observations of application workloads and of the technical environment, both current and anticipated, which represent a significant divergence from certain earlier file system assumptions. As a result, established choices have been reexamined and radically different design points have been explored.

- Google File System (GFS) is a scalable distributed file system (DFS) designed by Google Inc. to meet Google's growing data processing needs. GFS supports large networks of connected nodes and provides fault tolerance, dependability, scalability, availability, and performance. GFS is built from storage systems made of low-cost commodity hardware components. It is designed to meet Google's various data usage and storage requirements, such as those of its search engine, which generates massive volumes of data that must be stored. GFS takes advantage of the strengths of off-the-shelf servers while compensating for their hardware weaknesses. GoogleFS is another name for GFS.

- The file system has met Google's storage requirements. It is widely used within Google as a storage platform for generating and processing the data used by its services, as well as for research and development projects that require large data sets. The largest cluster to date provides hundreds of terabytes of storage across thousands of disks on over a thousand servers, and it is accessed concurrently by hundreds of clients.

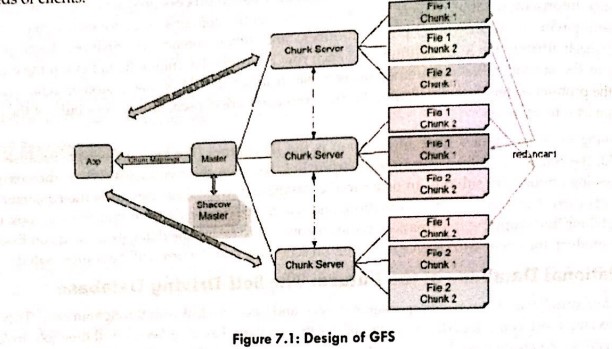

- A GFS cluster consists of a single master and numerous chunk servers, and it is accessed by many client systems. Chunk servers store data on their local drives as Linux files. Files are divided into large chunks (64 MB), and each chunk is replicated on at least three chunk servers. The large chunk size reduces network overhead, because clients need to contact the master less often.

- GFS is intended to meet Google's huge cluster requirements without burdening applications. Files are organized hierarchically in directories and identified by pathnames. The master manages metadata such as the namespace, access control information, and the mapping from files to chunks, and it monitors each chunk server through periodic heartbeat messages that carry status updates.
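The chunk and metadata ideas above can be made concrete with a small sketch. It is only an illustration under stated assumptions, not GFS's actual implementation: the `Master` class, its method names, and the 30-second heartbeat timeout are all hypothetical. It shows how a byte offset maps to a 64 MB chunk, and how a master might use heartbeats to know which replicas are currently reachable.

```python
import time
from dataclasses import dataclass, field

CHUNK_SIZE = 64 * 1024 * 1024   # 64 MB chunks, as described above
REPLICATION_FACTOR = 3          # each chunk should have at least three replicas
HEARTBEAT_TIMEOUT = 30.0        # seconds; illustrative value, not from the source


def chunk_index(byte_offset: int) -> int:
    """Translate a byte offset within a file into a chunk index."""
    return byte_offset // CHUNK_SIZE


@dataclass
class Master:
    """Hypothetical in-memory model of the master's metadata."""
    # pathname -> ordered list of chunk handles
    file_chunks: dict = field(default_factory=dict)
    # chunk handle -> set of chunk server ids that hold a replica
    chunk_locations: dict = field(default_factory=dict)
    # chunk server id -> timestamp of its last heartbeat
    last_heartbeat: dict = field(default_factory=dict)

    def heartbeat(self, server_id, held_chunks, now=None):
        """Record a heartbeat and the chunks the server reports holding."""
        now = time.time() if now is None else now
        self.last_heartbeat[server_id] = now
        for handle in held_chunks:
            self.chunk_locations.setdefault(handle, set()).add(server_id)

    def live_servers(self, now=None):
        """Servers whose last heartbeat is recent enough."""
        now = time.time() if now is None else now
        return {s for s, t in self.last_heartbeat.items()
                if now - t <= HEARTBEAT_TIMEOUT}

    def lookup(self, path, byte_offset, now=None):
        """Return the chunk handle and live replica locations for an offset."""
        handle = self.file_chunks[path][chunk_index(byte_offset)]
        live = self.live_servers(now)
        return handle, sorted(self.chunk_locations.get(handle, set()) & live)

    def under_replicated(self, now=None):
        """Chunk handles with fewer live replicas than the target."""
        live = self.live_servers(now)
        return sorted(h for h, servers in self.chunk_locations.items()
                      if len(servers & live) < REPLICATION_FACTOR)
```

In this sketch a client asks the master once per chunk and then reads from a chunk server directly, which is why a large chunk size keeps the number of master requests low.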

GFS Features Include

- Fault tolerance

- Critical data replication

- Automatic and efficient data recovery

- High aggregate throughput

- Reduced client and master interaction because of the large chunk size

- Namespace management and locking

- High availability

OR,

The features of the Google file system are as follows:

• GFS was designed for high fault tolerance.

• Master and chunk servers can be restarted in a few seconds. With such fast recovery, the window of time in which data is unavailable is greatly reduced.

• Each chunk is replicated in at least three places, so a chunk remains available even if two of its replicas are lost.

• If the GFS master fails, shadow masters provide read-only access to the file system until it recovers.

• For data integrity, GFS keeps a checksum for every 64 KB block in each chunk.

• GFS achieves the goals of high availability and high performance in a practical implementation.

• It demonstrates how to support large-scale processing workloads on commodity hardware: the system is designed to tolerate frequent component failures and is optimized for huge files that are mostly appended to and read.
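The per-block checksum idea from the list above can be sketched as follows. This is a minimal illustration, assuming CRC-32 from Python's `zlib` module as the checksum function; the source does not say which checksum GFS uses, and the function names `block_checksums` and `corrupted_blocks` are hypothetical.

```python
import zlib
from typing import List

BLOCK_SIZE = 64 * 1024  # 64 KB blocks, as stated above


def block_checksums(chunk: bytes) -> List[int]:
    """Compute one CRC-32 checksum per 64 KB block of a chunk."""
    return [zlib.crc32(chunk[i:i + BLOCK_SIZE])
            for i in range(0, len(chunk), BLOCK_SIZE)]


def corrupted_blocks(chunk: bytes, expected: List[int]) -> List[int]:
    """Return the indices of blocks whose checksum no longer matches."""
    actual = block_checksums(chunk)
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]
```

Checking at block granularity means a reader only has to verify the blocks it actually reads, and a mismatch points to a specific block that can be restored from another replica.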

The biggest GFS clusters have over 1,000 nodes with a total disk storage capacity of 300 TB, and they are accessed continuously by hundreds of clients.
