What are the types of applications that can benefit from cloud computing?/Explain the applications of Cloud Computing in various fields with proper examples.

Applications that can benefit from cloud computing

1) Scientific Applications

- For many years, scientific applications have run on traditional high-performance computing (HPC) systems such as supercomputers and clusters, as well as on high-throughput computing (HTC) platforms such as grids. Many businesses have also used classical HPC to tackle a range of problems, because it gives them access to very large amounts of computing power. Although these systems were often built to solve one specific problem, a growing number of SMEs and even university departments began to use general-purpose HPC systems. Scientists, engineers, system administrators, and developers are now investigating HPC cloud environments to see what cloud computing, as a newer and still-growing technology, can offer them.

- The popularity of cloud computing has made running complex scientific applications more accessible to the research community: researchers can obtain compute resources on demand in minutes rather than waiting in job queues and running into peak-demand bottlenecks. Cloud computing holds a lot of promise for scientific applications, but it was designed for business and web applications, whose resource requirements differ from those of scientific workloads. Communication-intensive, tightly coupled scientific applications typically need low-latency, high-bandwidth interconnects and parallel file systems.

a) Healthcare: ECG Analysis in the Cloud

Scientific Applications

- Scientific applications increasingly use cloud computing, because it makes computing resources and storage available in potentially unlimited quantities at reasonable prices.

- Details of computing categories such as High-Performance Computing (HPC) and High-Throughput Computing (HTC) were already discussed in the task programming section of Unit V.

High-Performance Computing: High-performance computing (HPC) refers to the use of powerful computing facilities, such as supercomputers and clusters, to solve problems that need a large amount of computing power.

High-Throughput Computing: High-throughput computing (HTC) refers to the use of a large number of computing resources over a long period to complete a large computational job.

Healthcare: ECG (Electrocardiogram) Analysis in cloud computing

Healthcare is a sector in which information technology has many uses. Applications support businesses and help scientists develop solutions for preventing disease. With the widespread availability of the Internet, cloud computing has emerged as an appealing option for building health monitoring systems. The ECG machine, for example, is a health monitoring device that records the heart's activity and prints the result on graph paper.

The electrocardiogram (ECG) records the electrical activity of the heart muscle (the myocardium). This activity produces a waveform that repeats over time and represents the heartbeat.

- Analysis of the shape of the ECG waveform is used to identify arrhythmias, and it is the most common way of detecting heart disease.

- An arrhythmia is a heartbeat that does not have a steady rhythm; an arrhythmic heartbeat is one that is out of its normal rhythm.

Now let us see how cloud computing applies to ECG analysis.

- Cloud computing technologies allow the remote monitoring of a patient's heartbeat data. In this way, the patient at risk can be constantly monitored without going to the hospital for ECG analysis.

- At the same time, doctors can be notified instantly of cases that need their attention.

- In the figure, different types of computing devices equipped with ECG sensors constantly monitor the patient's heartbeat.

- This information is transmitted to the patient's mobile device, which immediately forwards it to the cloud-hosted web service for analysis.

- The web service forms the front end of a platform that is hosted entirely in the cloud and uses all three layers of the cloud computing stack: SaaS, PaaS, and IaaS.
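
The kind of analysis the cloud-hosted service might run can be illustrated with a minimal sketch. It assumes the heartbeat times (in seconds) have already been extracted from the ECG waveform, and it flags a rhythm as irregular when the gaps between beats vary too much. The function names and the 15% threshold are hypothetical choices made for illustration; they do not come from any real ECG service and are not clinical guidance.

```python
from statistics import mean, pstdev

def rr_intervals(beat_times):
    """Return the time gaps (in seconds) between consecutive heartbeats."""
    return [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]

def heart_rate_bpm(beat_times):
    """Average heart rate in beats per minute."""
    return 60.0 / mean(rr_intervals(beat_times))

def is_irregular(beat_times, max_variation=0.15):
    """Flag the rhythm as irregular when the spread of the beat-to-beat
    intervals exceeds max_variation (a hypothetical threshold) of their mean."""
    intervals = rr_intervals(beat_times)
    return pstdev(intervals) / mean(intervals) > max_variation

# Made-up beat timestamps: one steady rhythm, one uneven rhythm.
steady = [0.0, 0.8, 1.6, 2.4, 3.2, 4.0]
uneven = [0.0, 0.5, 1.7, 2.1, 3.4, 3.8]
print(round(heart_rate_bpm(steady)), is_irregular(steady), is_irregular(uneven))
```

In a deployment like the one described, a positive result from such a check would be what triggers the notification to the doctor.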

Advantages

- The first advantage is the elasticity of the cloud infrastructure, which can shrink and grow according to the number of requests served.

- The second advantage is that cloud computing services are easily accessible and promise to deliver results with minimal delay.

- Because capacity is obtained on demand, doctors and hospitals do not need to invest in large computing infrastructures.
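
Elasticity can be pictured as a simple scaling rule. The sketch below is only an assumption about how such a rule might look: the per-instance capacity and the lower and upper limits are hypothetical numbers, not values from any real cloud provider.

```python
import math

def instances_needed(requests_per_second, capacity_per_instance=50,
                     min_instances=1, max_instances=20):
    """Return how many instances to run for the current load.

    All default values are hypothetical and chosen for illustration only.
    """
    wanted = math.ceil(requests_per_second / capacity_per_instance)
    # Never drop below the minimum or exceed the maximum.
    return max(min_instances, min(max_instances, wanted))

for load in (0, 120, 5000):
    print(load, "req/s ->", instances_needed(load), "instance(s)")
```

The infrastructure grows when many ECG readings arrive at once and shrinks again when demand falls, so capacity is only paid for while it is used.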

b) Biology: Protein Structure Prediction (PSP)

- Cloud computing is a technology that gives users on-demand access to a variety of computing services. It offers easy access to a pool of higher-level services and other system resources. Cloud computing has become increasingly important in geology, biology, and other scientific fields.

- Protein structure prediction is a prime example of a research area that uses cloud technologies for processing and storage. A protein is made up of long chains of amino acids joined by peptide bonds. Knowledge of protein structures aids the development of new drugs, and protein structure prediction is the prediction of a protein's three-dimensional structure from its amino acid sequence.

- The primary structure (the amino acid sequence) is determined first, and the secondary, tertiary, and quaternary structures are predicted from it. Protein structure prediction employs a variety of techniques, including artificial neural networks, artificial intelligence, machine learning, and probabilistic approaches, and is crucial in disciplines such as theoretical chemistry and bioinformatics.

Protein

- Proteins are long chains of amino acids and are essential to all life. They are large molecules that our cells need to function properly. The structure and function of our bodies depend on proteins, and the body's cells, tissues, and organs cannot be regulated without them.

- Muscles, skin, bones, and other parts of the human body contain significant amounts of protein. Enzymes, antibodies, and many hormones are proteins as well.

- The human body consists of tens of trillions of cells. Each cell contains thousands of different proteins, which together enable the cell to do its job. Proteins are like tiny machines inside the cell.

Why cloud computing for PSP?

- Protein structure prediction requires high computing capabilities and often operates on large datasets that cause extensive I/O operations.

- It is a computationally intensive task that is fundamental to many types of research in the life sciences.

- Determining a 3D structure manually is difficult, slow, and expensive.

- Knowing the structure helps in the design of new drugs for the treatment of diseases.

- The geometric structure of a protein cannot be directly inferred from the sequence of genes that compose its structure; it is the result of complex computations aimed at identifying the structure that minimizes the required energy. These computations need a great deal of computing power, which is extremely expensive to own.

- Cloud computing grants access to such capacity on a pay-per-use basis.

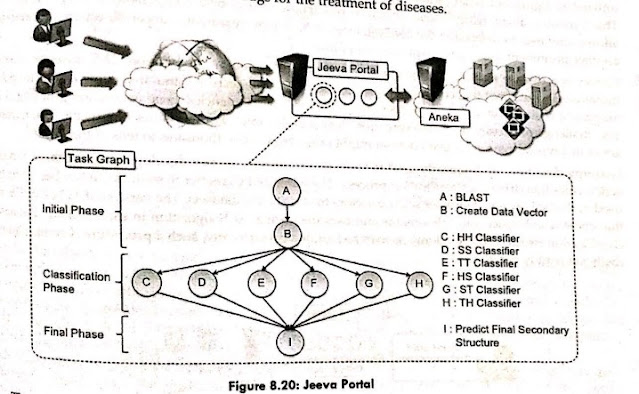

- One project that studies the use of cloud technology for protein structure prediction is Jeeva, an integrated web portal that lets scientists offload the prediction task to a computing cloud based on Aneka. The prediction task uses machine learning techniques to determine the secondary structure of proteins. These techniques turn the problem into one of pattern recognition, in which a sequence must be classified into one of three classes (E, H, and C). A popular implementation based on support vector machines divides the pattern recognition problem into three phases: initialization, classification, and a final phase.

- Although the three phases must run in sequence, the classification phase can be parallelized by running multiple classifiers concurrently. This makes it possible to reduce the computation time of the prediction significantly. The prediction algorithm is then translated into a task graph, which is submitted to Aneka. Once the task is complete, the middleware makes the results available for visualization through the portal.
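
The three-phase structure, with its parallel classification phase, can be sketched as follows. This is a toy illustration under loud assumptions: the classifiers are hand-written rules standing in for trained support vector machines, local threads stand in for tasks submitted to Aneka, and every function name here is hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def initialize(sequence, window=3):
    """Phase 1: cut the amino acid sequence into overlapping windows."""
    return [sequence[i:i + window] for i in range(len(sequence) - window + 1)]

def make_classifier(favoured_residues, label):
    """Build a toy classifier (a stand-in for a trained SVM).

    It assigns `label` when the central residue of a window is in
    `favoured_residues`, and "C" otherwise.
    """
    def classify(windows):
        return [label if w[len(w) // 2] in favoured_residues else "C"
                for w in windows]
    return classify

def classification(windows, classifiers):
    """Phase 2: run all classifiers concurrently (threads replace cloud tasks)."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda clf: clf(windows), classifiers))

def finalize(votes_per_classifier):
    """Phase 3: combine the votes for each window by majority."""
    return "".join(Counter(votes).most_common(1)[0][0]
                   for votes in zip(*votes_per_classifier))

def predict(sequence, classifiers):
    """Run the three phases in order and return one class letter per window."""
    return finalize(classification(initialize(sequence), classifiers))

# Hypothetical rules, chosen only to make the example run.
toy_classifiers = [
    make_classifier("AEL", "H"),
    make_classifier("VIY", "E"),
    make_classifier("AELM", "H"),
]
print(predict("AEVIG", toy_classifiers))
```

Only the middle phase is parallel: the windows must exist before any classifier can run, and the votes can only be combined once every classifier has finished.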

- Protein structure prediction can be done with a variety of techniques and tools. CASP (Critical Assessment of Protein Structure Prediction) is a well-known community experiment that evaluates prediction methods, including automated web servers, and evaluation results are available from servers such as CAMEO (Continuous Automated Model Evaluation). Such servers can be accessed by anyone, at any time and from any location, depending on their needs. Phobius, FoldX, LOMETS, Prime, PredictProtein, SignalP, BBSP, EVfold, Biskit, HHpred, Phyre, and ESyPred3D are some of the tools or services used in protein structure prediction. New structures are predicted using these tools, and the results are stored on cloud-based servers.

c) Biology: Gene Expression Data Analysis

- Gene expression profiling is the simultaneous measurement of the expression levels of thousands of genes. It is used to understand the biological processes that a medical treatment triggers at the cellular level. Together with protein structure prediction, it is a critical component of drug design, since it allows scientists to determine the effects of a specific treatment. Cancer diagnosis and treatment is another prominent application of gene expression profiling.

- Cancer is a disease marked by uncontrolled cell growth and proliferation. It arises from mutations in the genes that regulate cell growth, which means that all cancerous cells contain mutated genes. Gene expression profiling is therefore used to classify tumors more precisely. Classifying gene expression data samples into distinct classes is a difficult task: a typical gene expression dataset can contain anywhere from a few thousand to tens of thousands of genes.

- Learning classifier systems are frequently used to address this problem; they evolve a population of condition-action rules that guide the classification process. The eXtended Classifier System (XCS) has been used successfully to classify large datasets in biology and computer science. A variant of XCS, CoXCS, divides the entire search space into subdomains and applies the standard XCS algorithm in each of them. Such a process is computationally expensive but easily parallelized, because the classification problems on the subdomains can be solved concurrently.

- Cloud-CoXCS is a cloud-based implementation of CoXCS that uses Aneka to solve the classification problems in parallel and to combine their results. The algorithm is controlled by strategies, which define how the outputs are combined and whether the process is complete.
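
The divide, solve-in-parallel, and combine pattern can be sketched as below. This is not XCS or CoXCS: a nearest-centroid rule stands in for the XCS algorithm on each subdomain, local threads stand in for Aneka, majority voting is one assumed combination strategy, and the tiny dataset is made up for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def split_features(n_features, n_subdomains):
    """Partition the gene (feature) indices into subdomains."""
    return [list(range(i, n_features, n_subdomains)) for i in range(n_subdomains)]

def train_centroids(samples, labels, feature_ids):
    """Stand-in for XCS on one subdomain: per-class mean of the chosen genes."""
    counts = Counter(labels)
    sums = {}
    for row, label in zip(samples, labels):
        acc = sums.setdefault(label, [0.0] * len(feature_ids))
        for k, f in enumerate(feature_ids):
            acc[k] += row[f]
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict_one(centroids, feature_ids, row):
    """Pick the class whose centroid is closest on this subdomain's genes."""
    def distance(centroid):
        return sum((row[f] - centroid[k]) ** 2 for k, f in enumerate(feature_ids))
    return min(centroids, key=lambda label: distance(centroids[label]))

def classify(samples, labels, row, n_subdomains=2):
    """Train one model per subdomain concurrently, then combine the votes."""
    subdomains = split_features(len(row), n_subdomains)
    with ThreadPoolExecutor() as pool:
        models = list(pool.map(
            lambda ids: train_centroids(samples, labels, ids), subdomains))
    votes = [predict_one(m, ids, row) for m, ids in zip(models, subdomains)]
    return Counter(votes).most_common(1)[0][0]  # assumed strategy: majority vote

# Made-up expression levels for four genes in four samples.
samples = [[1.0, 1.2, 0.9, 1.1], [0.8, 1.0, 1.1, 0.9],
           [5.0, 5.2, 4.9, 5.1], [5.1, 4.8, 5.0, 5.2]]
labels = ["healthy", "healthy", "tumor", "tumor"]
print(classify(samples, labels, [4.9, 5.0, 5.1, 5.0]))
```

Because each subdomain is trained independently, the work scales out naturally: more subdomains simply means more tasks that can run at the same time.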

d) Geoscience: Satellite Image Processing

- Geoscience applications collect, produce, and analyze massive volumes of geospatial and non-spatial data. The volume of data to be processed grows considerably as technology advances and our world becomes increasingly instrumented (e.g., through the deployment of sensors and satellites for monitoring). A fundamental component of geoscience applications is the geographic information system (GIS). GIS applications capture, store, manipulate, analyze, manage, and present all kinds of geographically referenced data. This kind of data is becoming increasingly important in a wide range of application areas, from advanced agriculture to civil security and natural resource management. As a result, large amounts of geo-referenced data are fed into computer systems for processing and analysis. Cloud computing is a compelling option for carrying out these demanding tasks and extracting useful information to support decision-making.

- Satellite remote sensing generates hundreds of terabytes of raw images, which must be processed before they can be used to create a variety of GIS products. This processing involves both I/O-intensive and compute-intensive operations. Large images must be moved from a ground station's local storage to compute facilities, where they undergo multiple transformations and corrections. Cloud computing provides the infrastructure needed to support these kinds of applications. The system depicted in the figure integrates several technologies across the entire computing stack.

- At the SaaS level, the application offers a collection of services for tasks such as geocoding and data visualization. At the PaaS level, Aneka manages the import of data into the virtualized infrastructure and the execution of the image-processing tasks that produce the desired result from raw satellite images. The platform uses a Xen private cloud and Aneka technology to dynamically provision (i.e., grow or shrink) the appropriate resources on demand. The research shows how cloud computing technologies can be used to offload heavy workloads from local computing facilities onto more elastic computing infrastructures.
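
One common way to spread image processing over many workers is to cut the image into pieces and correct each piece independently. The sketch below assumes a tiny image stored as a list of pixel rows, a simple linear correction with made-up gain and offset values, and local threads in place of cloud workers; none of these details come from the system described above.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_strips(image, strip_height):
    """Cut an image (a list of pixel rows) into horizontal strips."""
    return [image[r:r + strip_height] for r in range(0, len(image), strip_height)]

def correct_strip(strip, gain=2, offset=1):
    """Apply a per-pixel linear correction (hypothetical gain and offset)."""
    return [[gain * pixel + offset for pixel in row] for row in strip]

def process_image(image, strip_height=2, workers=4):
    """Correct all strips concurrently and stitch them back together."""
    strips = split_into_strips(image, strip_height)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        corrected = list(pool.map(correct_strip, strips))  # map keeps strip order
    return [row for strip in corrected for row in strip]

raw = [[0, 1], [2, 3], [4, 5]]
print(process_image(raw))
```

With real satellite images the strips would be far larger and the number of workers would be what the platform grows or shrinks on demand.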

2) Business and Consumer Applications

a) CRM and ERP

b) Social Networking

- Over the past several years, social networking applications have grown to become some of the most popular sites on the Internet. Twitter and Facebook have used cloud computing technologies to sustain their traffic and serve millions of users seamlessly. For social networks that are continually growing their user base, the ability to add capacity while systems keep running is the most appealing aspect.

- With over 1 billion members, Facebook is perhaps the most visible and interesting social networking platform. To sustain this enormous growth, Facebook has needed to add capacity and build new scalable technologies and software systems while maintaining high performance to ensure a seamless user experience. Systems with this many users require multiple data centers and highly efficient infrastructure. In Facebook's case, the platform primarily supports the system's core functionality while also providing APIs for integrating third-party applications, such as social games and quizzes created by others.

- The basic reference stack that serves Facebook is based on LAMP (Linux, Apache, MySQL, and PHP). This set of technologies is complemented by a suite of additional services developed in-house. These services are written in several languages and implement features such as search, news feeds, alerts, and analytics. The user's social graph is built while serving page requests. The social graph identifies a collection of interlinked information that is relevant to a given user. Most of the user data is retrieved by querying a distributed cluster of MySQL instances, which mostly hold key-value pairs. The data is then cached for faster retrieval. The rest of the relevant information is then put together using the services described above. These services sit closer to the data and are written in languages that perform better than PHP. A collection of internally developed tools aids the development of services.
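
The idea of reading key-value data from a cluster of database instances and caching it for later requests can be sketched as follows. This is a generic illustration, not Facebook's implementation: the class names, the hash-based choice of instance, and the in-memory dictionaries standing in for MySQL and for the cache are all assumptions.

```python
import zlib

class ShardedStore:
    """Toy stand-in for a cluster of database instances holding key-value pairs."""

    def __init__(self, n_shards):
        self.shards = [{} for _ in range(n_shards)]
        self.reads = 0  # counts how often the "database" is queried

    def _shard_for(self, key):
        # A stable hash sends the same key to the same instance every time.
        return self.shards[zlib.crc32(key.encode()) % len(self.shards)]

    def put(self, key, value):
        self._shard_for(key)[key] = value

    def get(self, key):
        self.reads += 1
        return self._shard_for(key).get(key)

class CachedReader:
    """Look in the cache first and fall back to the store on a miss."""

    def __init__(self, store):
        self.store = store
        self.cache = {}

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.store.get(key)
        return self.cache[key]

store = ShardedStore(n_shards=4)
store.put("user:42:name", "Ada")
reader = CachedReader(store)
reader.get("user:42:name")   # first read goes to the store
reader.get("user:42:name")   # second read is served from the cache
print(store.reads)
```

Repeated requests for the same piece of user data then avoid the database entirely, which is why caching speeds up page requests.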
