What is MPI? What are its main characteristics? Describe.

MPI applications

- The Message Passing Interface (MPI) is a de facto standard for writing parallel applications that communicate through efficient message passing. It is widely used on current HPC clusters. The challenge with a computer cluster is that while some CPUs share memory, others have a distributed memory architecture and are connected only by a network. Interface specifications have been defined and implemented for C/C++ and Fortran. Developers can target distributed memory, shared memory, or a hybrid of the two, all with MPI. Several MPI libraries have been available over the years; some of the most popular are MPICH, MVAPICH, Open MPI, and Intel MPI.

Characteristics: MPI provides developers with a set of routines that:

- Manage the distributed environment where MPI programs are executed

- Provide facilities for point-to-point communication

- Provide facilities for group communication

- Provide support for data structure definition and memory allocation

- Provide basic support for synchronization with blocking calls

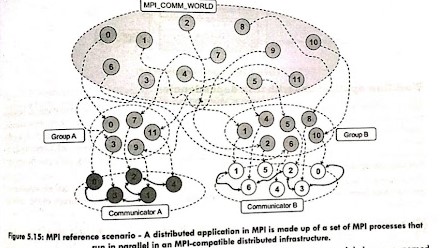

- MPI applications run on a distributed infrastructure that supports MPI. MPI processes that share the same MPI runtime are automatically assigned to a global group named MPI_COMM_WORLD. Within that group, each distributed process has a unique identifier that allows the MPI runtime to locate and address it. Specific groups can also be formed as subsets of this global group.

- Each MPI process has its own rank within the associated group; the rank is a unique identifier that allows processes to communicate within the group. Communication is enabled through a communicator component that may be customized for each group.

- To develop an MPI application, the code for the MPI process that will be performed in parallel must be defined. The parallel part of the code is readily recognizable by two actions that set up and shut down the MPI environment, respectively. Within the code block delimited by these two actions, all MPI functions can be used to transmit or receive messages in either asynchronous or synchronous mode.
